What is Unsloth

Unsloth is a Python library that allows you to fine-tune models without using silly amounts of compute. It does this by working with the model at lower numeric precision (quantization).

"Almost everyone here is doing load_in_4bit. You load the model as a (dynamically) quantized 4bit version, then create an (uninitialized) bf16 QLoRA, train said QLoRA, merge the QLoRA onto the quantized model, generating an fp16 or bf16 model (up-quantized). [Optionally,] quantize it back down to your desired level" [Discord]

"LORAs are always fp16" [Discord]

AWQ (Activation-aware Weight Quantization) "preserves a small fraction of the weights that are important for LLM performance to compress a model to 4-bits" [HuggingFace]

Models

What size model can I fit into my GPU's VRAM?

A "safe bet would be to divide by 1.5 - 2x your total available memory (ram + vram) to get the B parameters vram / 1.5 or 2 to get the active params, so for example, I have 8gb vram i can load a 8b 4bit quantized model directly onto my gpu because of its relatively small approx 4-5gb vram footprint." [Discord]
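The 4-5 GB figure in that quote lines up with simple arithmetic on the weights alone: parameters times bits per weight, divided by 8 to get bytes. The sketch below is only that arithmetic; the function name is made up for illustration, and it ignores activations, the KV cache and framework overhead, which is where the extra headroom in the rule of thumb goes.

```python
def weight_footprint_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Compare the same 8B model at different precisions.
for bits in (16, 8, 4):
    size = weight_footprint_gb(8, bits)
    print(f"8B model at {bits}-bit: ~{size:.0f} GB of weights")
```

At 4-bit an 8B model needs roughly 4 GB for its weights, which is why it can sit on an 8 GB card with room left over for everything else.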

This sentiment seems popular at the moment:

Hyperparameters

You want a rank "that is large enough to imitate the writing style and copy the knowledge from our instruction samples. You can increase this value to 64 or 128 if your results are underwhelming."

"The importance of the reference model is directly controlled via a beta parameter between 0 and 1. The reference model is ignored when beta is equal to 0, which means that the trained model can be very different from the SFT one. In practice, a value of 0.1 is the most popular one, but this can be tweaked"

LLM Engineer's Handbook

In the event that you're not "able to achieve consistently accurate answers to my questions... the model's responses are sometimes correct and sometimes incorrect, even for questions directly from the fine-tuning dataset", the advice is:

"You may want to up the rank and the alpha. Rank = how many weights it affects. Alpha is how strongly they are affected. PEFT only affects the last few layers" [Discord]

(PEFT is Parameter-Efficient Fine-Tuning. LoRA is just one of these methods.)

"Alpha should at least equal to the rank number, and rank should be bigger for smaller models/more complex datasets; it usually is between 4 and 64." [Discord]
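To get a feel for what the rank buys you, it helps to count parameters. LoRA trains two small matrices per adapted weight matrix, A (r × d_in) and B (d_out × r), so the trainable count grows linearly with r. The sketch below is a rough illustration; the helper name and the hidden size of 4096 are assumptions chosen for the example, not values from any particular model.

```python
def lora_trainable_params(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters LoRA adds for one weight matrix.

    A has shape (r, d_in) and B has shape (d_out, r).
    """
    return r * (d_in + d_out)


d = 4096          # hypothetical hidden size, for illustration only
full = d * d      # parameters in the frozen d x d matrix

for r in (4, 16, 64, 128):
    p = lora_trainable_params(d, d, r)
    print(f"r={r:>3}: {p:,} trainable params ({100 * p / full:.2f}% of the full matrix)")
```

Even at r=128 the adapter is a small fraction of the matrix it sits on, which is why raising the rank is a cheap first thing to try when results are underwhelming.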

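Batch size takes up a lot of VRAM, so if you are hitting OOMs (out-of-memory errors), choose smaller batches. This will mean training takes longer and each update is based on fewer samples. To counter the latter, you can increase the number of gradient accumulation steps. This in effect batches the batches: gradients from several small batches are added up and the deltas are written back to the matrix less often, so the effective batch size is the batch size multiplied by the accumulation steps.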
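Here is a minimal sketch of why gradient accumulation works, using a toy one-parameter model rather than a real training loop. The data, the `grad_mse` helper and the batch sizes are all made up for illustration. It shows that averaging the gradients of equally sized micro-batches gives the same gradient as one large batch, while only one micro-batch has to be in memory at a time.

```python
def grad_mse(w: float, batch: list[tuple[float, float]]) -> float:
    """Gradient of mean squared error for the model y = w * x over a batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]  # toy (x, y) pairs
w = 0.0

# One big batch: needs all four samples in memory at once.
full_batch_grad = grad_mse(w, data)

# Same effective batch, processed as micro-batches of 2 with accumulation.
micro_batch_size = 2
accum_steps = len(data) // micro_batch_size
accumulated_grad = 0.0
for step in range(accum_steps):
    start = step * micro_batch_size
    micro_batch = data[start:start + micro_batch_size]
    # Divide by accum_steps so the sum is an average over micro-batches.
    accumulated_grad += grad_mse(w, micro_batch) / accum_steps

print(full_batch_grad, accumulated_grad)
```

The two numbers agree up to floating-point rounding. The weight update happens once after the loop, which is the "writes back less often" part.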

Data

Data makes or breaks an LLM. An old hand who goes by the name MrDragonFox on Discord says:

"Most problems are really in the data itself has to be prepped. 80% of the work is there" [Discord]

"You will need good data. ML 101: garbage in, garbage out. That is a science in itself." [Discord]

"Over 80% of the moat is in the data - once you have that - you can start with experimenting" [Discord]

It's a view echoed in LLM Engineer's Handbook: "In most use cases, creating an instruction dataset is the most difficult part of the fine-tuning process."

Cleaning the data is essential:

"Avoid abbreviations if you can. As a general rule, you shouldn't expect [a] model to understand and be able to do a task a human with the same context would be unable to accomplish" - etrotta, Discord

But quantity is not quality. In general, "if I have 30k samples (user-assistant), will finetuning a base or instruct model result in a strictly better model?"

"No. Fine tuning can worsen the model's performance if your data is bad or poorly formatted. Even if the data is good, it could cause the model to 'forget' things outside of the dataset you used to fine tune it (although that is a bit less likely with LoRA). Fine tuning will make the outputs closer to whatever you trained it on. For it to make a better model, "whatever you trained it on" must be better than what it's currently responding and follow the same structure you will use later." [Discord]

Overfitting and underfitting

"It's very normal, it's overfitting" [Discord]

"Underfitting is probably seen more often as a common phenomenon where a low rank model fails to generalize due to a lack of learnable params"

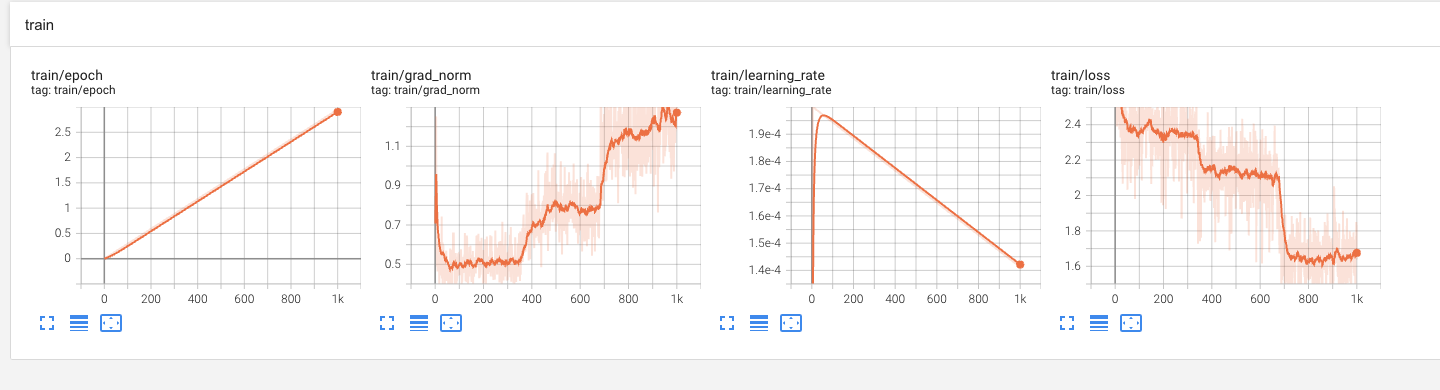

"Generally speaking you are looking for a smooth descent of loss and a gradual convergence of around 0.5. Making data more diverse and novel compared to what the model has seen already is good to combat overfitting and reducing LR/epochs can help" [Discord]

Increasing rank and alpha, the usual remedy when a low-rank model underfits, should be included here.

Apparently this does not apply to rsLoRA (Rank-Stabilized LoRA), which addresses the training instability and performance degradation that can arise in low-rank adaptation methods when fine-tuning on complex datasets.

"I think for RSLoRA, alpha is sqrt(hidden_size), not sqrt(r) as claimed in the blog post. You can have whatever LoRA size (rank) you want for GRPO, with or without RS. But note that for GRPO it's usually a good idea to have max_grad_norm=0.1 (or some other low value) because GRPO models tend to go insane if you overcook them. It's always either a=r or a=sqrt(hidden_size) if use_rslora=True"
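For context on why alpha and rank interact differently under rsLoRA: the LoRA update is multiplied by a scaling factor, which is alpha / r in standard LoRA and alpha / sqrt(r) in the rank-stabilized variant (this is how Hugging Face PEFT documents its `use_rslora` option). The helper below is a hypothetical stand-in written for this post, not a library function, and it says nothing about which alpha you should pick.

```python
import math


def lora_scaling(alpha: float, r: int, use_rslora: bool = False) -> float:
    """Scaling factor applied to the LoRA update B @ A."""
    if use_rslora:
        return alpha / math.sqrt(r)  # rank-stabilized: shrinks slowly with r
    return alpha / r                 # standard LoRA: shrinks linearly with r


alpha = 16
for r in (8, 16, 64, 256):
    standard = lora_scaling(alpha, r)
    stabilized = lora_scaling(alpha, r, use_rslora=True)
    print(f"r={r:>3}: standard={standard:.4f}  rslora={stabilized:.4f}")
```

With alpha fixed, the standard factor collapses as the rank grows while the rank-stabilized one shrinks far more gently, so the a=r habit from standard LoRA does not carry over directly.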

If a fine-tuned model doesn't zero-shot a relatively simple question it was trained on, you "might need more examples or deeper rank" [Discord]
