We have several ML models for which we calculate the odds (the coefficients in a linear regression model) and the risks (the difference in probabilities between the factual and the counterfactual).

Previously, we saw that for one of our six models, the sign of the odds and risks differed when they should always be the same.
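To see why the two signs should agree, here is a minimal sketch. It assumes, which the text above does not state, that the coefficient comes from a model with a logistic link and that the risk is the model's predicted probability with a binary feature switched on minus the probability with it switched off. The function names and the intercept value are purely illustrative.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function: maps log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def risk_difference(intercept: float, coefficient: float) -> float:
    """P(outcome | feature on) - P(outcome | feature off) for a binary feature."""
    return sigmoid(intercept + coefficient) - sigmoid(intercept)


def sign(x: float) -> int:
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


# The sigmoid is strictly increasing, so the risk difference moves in the
# same direction as the coefficient, whatever the intercept is.
for coef in (-2.0, -0.1, 0.1, 2.0):
    rd = risk_difference(intercept=-1.0, coefficient=coef)
    assert sign(rd) == sign(coef)
```

Under these assumptions, a disagreement in sign cannot come from the maths alone. It points at the two numbers being computed from different data or different fits.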

Fortunately, we caught this by putting a transform into our pipeline that takes the output of both the linear regression model and the factual/counterfactual probabilities and simply checks that their signs agree.
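A check of this kind could look roughly like the sketch below. `RegionResult` and `check_signs` are hypothetical names, not the production transform, and the row layout is an assumption. The QR1 values are the ones from the violation quoted in this post, and the second row is made up for contrast.

```python
from typing import List, NamedTuple, Sequence


class RegionResult(NamedTuple):
    """One joined row: regression output plus risk output (hypothetical layout)."""
    region: str
    coefficient: float      # from the regression ("odds") transform
    risk_difference: float  # factual minus counterfactual probability
    p_value: float


def sign(x: float) -> int:
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def check_signs(results: Sequence[RegionResult]) -> List[str]:
    """Return one message per region whose two signs disagree.

    A zero on one side and a non-zero on the other counts as a disagreement.
    """
    violations = []
    for r in results:
        if sign(r.coefficient) != sign(r.risk_difference):
            violations.append(
                f"difference in risk ({r.risk_difference}) does not agree with "
                f"the sign of the coefficient ({r.coefficient}) "
                f"for {r.region} when p-value = {r.p_value}"
            )
    return violations


results = [
    RegionResult("QR1", -4182.031458784066, 0.14285714285714285, 0.988606800326618),
    RegionResult("made-up region", 0.8, 0.05, 0.01),  # illustrative values only
]
violations = check_signs(results)
```

Returning messages rather than raising keeps the check diagnostic: the production data still gets built, and the violations land in their own data set for inspection.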

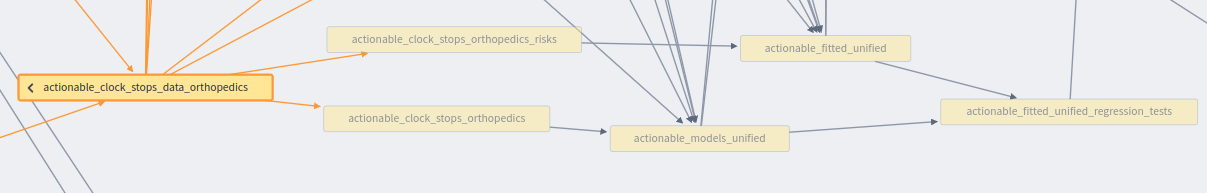

Figure: Part of our ML pipeline. Some transforms create production data, others diagnostic.

In the graph above, anything with _regression_tests appended is a diagnostic transform, while everything else is production data that will ultimately be seen by the customer.

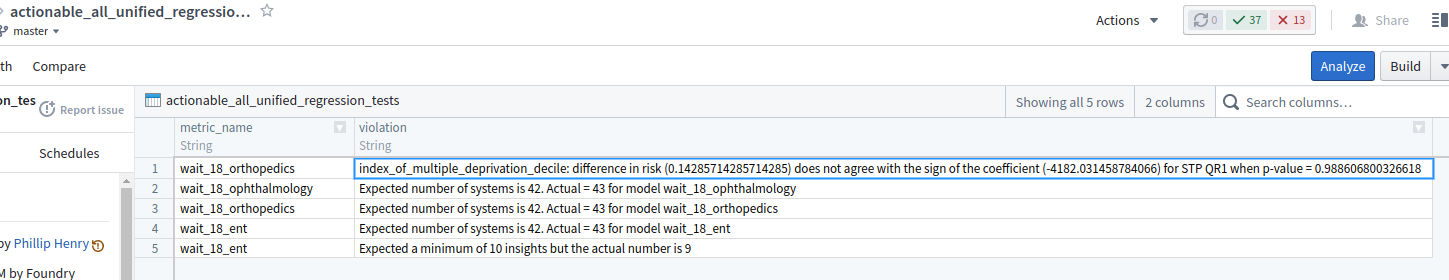

Recently, a diagnostic test reported:

difference in risk (0.14285714285714285) does not agree with the sign of the coefficient (-4182.031458784066) for STP QR1 when p-value = 0.988606800326618

Figure: Transform containing pipeline diagnostic data.

Aside: there are other violations in this file, but they're not unexpected. For instance, there are 42 health regions in England, but the data contains some rubbish values from the upstream data capture systems. The last row is due to us expecting at least 10 statistically significant outputs from our model when we only found 9. That's fine; we'll turn up the squelch on that one. A red flag would be the number of insights dropping to zero. This happened once, when we needed the outcome to be binary but accidentally applied a function of type ℕ => {0,1} twice. Oops.
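The double-application bug is easy to reproduce in miniature. The actual function is not shown in this post, so `to_binary` and its threshold below are hypothetical; the sketch only assumes that the mapping was some cutoff greater than 1.

```python
def to_binary(n: int, threshold: int = 2) -> int:
    """Hypothetical N -> {0, 1} mapping: 1 if the count reaches the threshold."""
    return 1 if n >= threshold else 0


counts = [0, 1, 2, 5, 9]

# Applied once, the outcome has both classes.
once = [to_binary(n) for n in counts]
assert once == [0, 0, 1, 1, 1]

# Applied twice, the inputs are already 0 or 1, both below the threshold,
# so every outcome collapses to 0.
twice = [to_binary(b) for b in once]
assert twice == [0, 0, 0, 0, 0]
```

With an outcome that only ever takes one value, a model has nothing to separate, which would be consistent with the number of insights dropping to zero.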

In a previous post, we found the problem was that the "risks" and the "odds" transforms, although using the same input file, were consuming that file at significantly different times. That problem manifested itself as most regions for that one model having different signs. The single diagnostic we see in the violations above is different: only one region (QR1) differs. All the others in the data set were fine.

Looking at the offending region, the first thing to note was that we had very little data for it. In fact, of the 42 health regions, it had the least by a large margin.

Figure: Number of rows of data per health region for a particular model.

So, the next step was to build a Python test harness and run these 127 rows against the production code locally. Running this on my laptop is so much easier, as I can, say, create a loop and run the model 20 times to see if there is some non-determinism happening. Apart from tiny changes in the numbers due to numerical instability, I could not recreate the bug.
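The loop itself can be sketched as follows. This is not the actual harness: `repeat_fit` is a hypothetical helper, and `fake_fit` stands in for the production code, which is assumed here to return a (coefficient, risk difference) pair for a set of rows.

```python
from collections import Counter
from typing import Callable, Dict, List, Sequence, Tuple


def sign(x: float) -> int:
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def repeat_fit(
    fit: Callable[[Sequence[Dict]], Tuple[float, float]],
    rows: Sequence[Dict],
    runs: int = 20,
) -> Tuple[Counter, float]:
    """Fit `runs` times on identical rows.

    Returns a tally of (coefficient sign, risk sign) pairs and the largest
    spread seen in the coefficient across runs.
    """
    tally: Counter = Counter()
    coefficients: List[float] = []
    for _ in range(runs):
        coefficient, risk = fit(rows)
        tally[(sign(coefficient), sign(risk))] += 1
        coefficients.append(coefficient)
    spread = max(coefficients) - min(coefficients)
    return tally, spread


def fake_fit(rows: Sequence[Dict]) -> Tuple[float, float]:
    """Stand-in for the production fit; always returns the same values."""
    return 0.5, 0.1


rows = [{} for _ in range(127)]  # placeholder for the 127 real rows
tally, spread = repeat_fit(fake_fit, rows)
```

If the fit were non-deterministic in a way that mattered, the tally would contain more than one key. A small non-zero spread with a single key is the "numerical instability only" outcome described above.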

So, like in the previous post, could my assumption that the data is the same for both risk and odds calculations be flawed? The pipeline was saying that the two descendant data sets were built seconds after the parent.

Figure: Both risk and odds data descend from the same parent.

Could there be some bizarre AWS eventual consistency issue? This seems not to be the case, as S3 reads and writes are now strongly consistent (see this AWS post from December 2020).

Conclusion

Why just one region in one model has a different sign is still a mystery. Multiple attempts to reproduce the bug have failed. However, since there is so little data for this region, the p-values are very high (c. 0.99), so we disregard any insights from it anyway. We'll just have to keep an eye out for it happening again.
